‘We Didn’t Vote for ChatGPT’ – Swedish PM’s AI Experiment Sparks a Quiet Revolution in Governance

When Sweden’s Prime Minister, Ulf Kristersson, leaned on ChatGPT for political advice, the move didn’t just raise eyebrows – it ignited a debate about the future of democracy, privacy, and the role of AI in leadership [1]. The irony? In a system where citizens elect leaders to think, those leaders are now asking a chatbot to advise them. As one observer noted, “If AI could run a country, we’d have already elected a chatbot.”

The Limits of AI in Politics: Can a Bot Reason?

AI today is a tool, not a sage. While it can summarize data or draft emails, it lacks the contextual wisdom to weigh complex political dilemmas. ChatGPT might suggest policies, but it can’t feel the weight of a decision, gauge public sentiment, or navigate the messy human dynamics of governance. As Simone Fischer-Hübner, a computer science researcher at Karlstad University, warned: “You have to be very careful” when handling sensitive information with tools hosted beyond your control.

The Scary Part: When AI Becomes a Puppeteer

The real danger isn’t AI’s lack of reasoning – it’s the possibility of AI being used to manipulate. Imagine a future where AI tools subtly steer a leader’s decisions, amplifying biases or embedding hidden agendas. This isn’t just a plot twist in a dystopian novel – it’s a warning. The stakes? A world where governance is shaped not by human judgment, but by algorithms with opaque motives.

The Way Forward: Sovereignty, Simplicity, and Trust

To reclaim control, we need systems that prioritize data sovereignty, secure integration, and transparency. Here’s how:

- Data Sovereignty: Own What’s Yours

Sensitive information – policy drafts, citizen data, strategic plans – should never leave national or corporate firewalls. By anchoring AI systems on-premise, governments and organizations can ensure their data stays protected from foreign servers and unseen hands.

- Low-Code Integration, High-Code Security

AI tools should empower, not complicate. A low-code platform lets teams integrate external data seamlessly, while robust security ensures that every interaction is auditable and governed by admins.

- Knowledge as a Service (KaaS)

Imagine your organization’s curated knowledge being shared securely via APIs, accessible to others yet fully overseen by your admins. This is the future of collaboration: AI-driven, but human-governed.

- AI Agents: Automate with Accountability

When AI proves reliable in repetitive tasks – analyzing data, drafting reports, or even composing messages – it should automate those tasks. But with transparency: every action traceable, every decision explainable.
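
To make “every action traceable” a little more concrete, here is a minimal sketch of a tamper-evident audit trail for agent actions. It is an illustration under stated assumptions, not how SoftAIBox or any particular product works: the names `AuditLog`, `record`, and `verify` are hypothetical, and hash chaining is just one common way to make a log verifiable after the fact.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


@dataclass
class AuditLog:
    """Hypothetical append-only log: each entry stores the previous entry's hash."""

    entries: list = field(default_factory=list)

    def record(self, agent: str, action: str, rationale: str) -> dict:
        """Append one agent action together with the reason it was taken."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "action": action,
            "rationale": rationale,
            "prev_hash": prev_hash,
        }
        # The hash covers everything above, including the link to the previous entry.
        entry["hash"] = _digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return True only if no entry was altered, removed, or reordered."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.record("report-bot", "draft_report", "weekly summary requested by an admin")
    log.record("report-bot", "send_message", "draft approved by an admin")
    print("log intact:", log.verify())
```

Storing a rationale next to each action is what lets an admin later ask not only what the agent did but why, and the chained hashes mean a silently edited entry causes `verify()` to fail.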

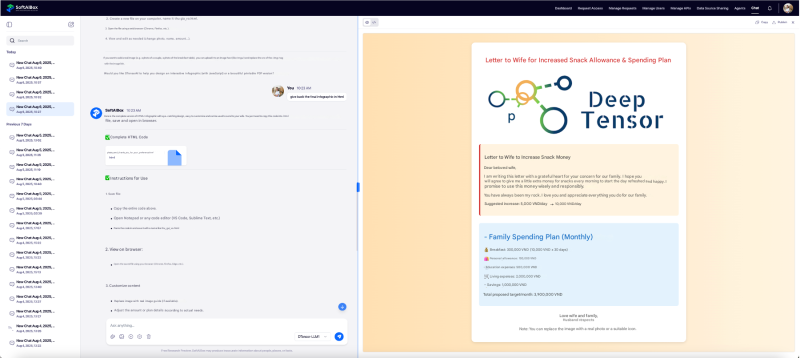

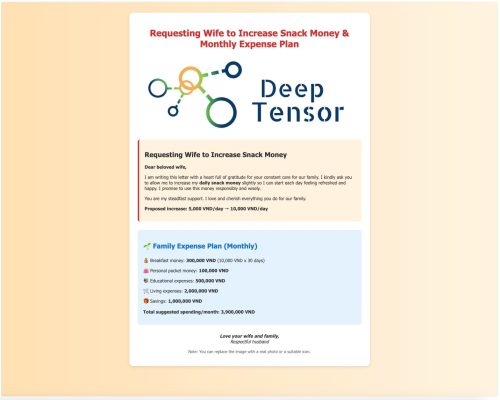

SoftAIBox: A Private AIBox for a Public Future

At DeepTensor AB, we built SoftAIBox with these principles in mind. It’s a private, on-premise AI system that lets organizations scale their AI capabilities without sacrificing control. One of my first thoughts when testing it? How can I use this to make my teammates’ lives better? Since most of the team are married and identify as He/Him, I asked SoftAIBox to draft a message to their wives – in the hope that their daily allowance requests get approved! Here is the result:

You can also view the original output here: https://www.deeptensor.ai/files/demo/letter2wife.html

Conclusion

The Swedish PM’s ChatGPT experiment isn’t the end of democracy – it’s a call to action. We must build systems that augment human judgment, not replace it. By prioritizing data sovereignty, secure integration, and transparent automation, we can ensure AI remains a tool for empowerment, not a puppeteer for control. After all, we didn’t vote for ChatGPT – but we can vote for a future where AI serves us, not the other way around.

#SoftAIBox #DeepTensorAI

Contact us if you want to learn more about SoftAIBox or to book a demo: https://www.deeptensor.ai/contact-us/

[1] Original news: https://www.theguardian.com/technology/2025/aug/05/chat-gpt-swedish-pm-ulf-kristersson-under-fire-for-using-ai-in-role